CAED-Agent: An Agentic Framework to Automate Simulation-Based Experimental Design

Under review for The Fourteenth International Conference on Learning Representations

Abstract

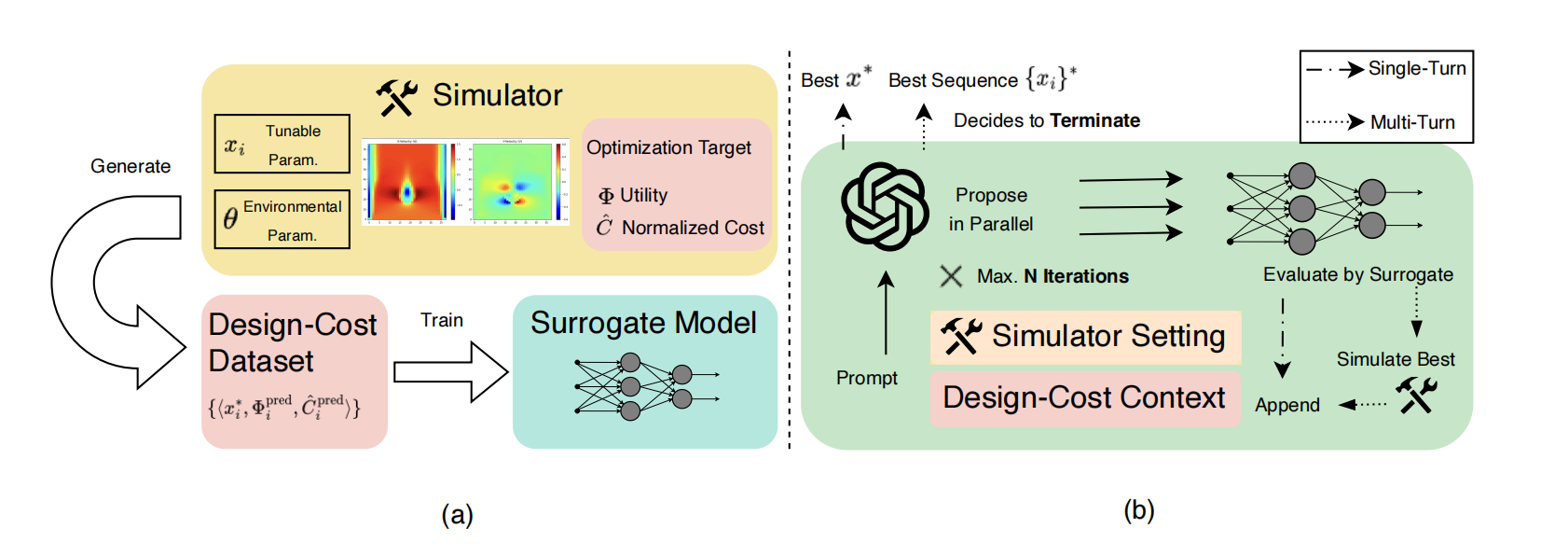

Tuning the parameters of expensive computer simulations is a challenging and ubiquitous task in the scientific research pipeline. We refer to these problems as Cost-Aware Simulation Configuration Optimization (CASCO). Traditional approaches include (a) brute-force search, which is prohibitive for high-dimensional parameter spaces; (b) Bayesian optimization, which struggles to generalize across setup variations and does not incorporate prior knowledge; and (c) case-by-case expert designs, which are effective but difficult to scale. Recent work on large language models (LLMs) as scientific agents has shown an initial ability to combine pre-trained domain knowledge with tool calling, enabling workflow automation. Replacing the expert’s manual design with this automation therefore seems a natural, scalable remedy for general CASCO problems. However, as our empirical evaluations show, LLMs lack cost awareness in parameter tuning tasks for scientific simulation, leading to poor and inefficient choices. Inference-time scaling approaches enable better exploration, but the many additional simulator queries they incur add to the total cost and defeat the goal of efficiency. To address this challenge, we propose the Cost-Aware Simulation Configuration Optimization Agent (CASCO-Agent), an agentic framework that combines inference-time scaling with cost-efficiency feedback from a lightweight surrogate model to solve CASCO problems. Our experiments on three simulation cases show that CASCO-Agent outperforms both Bayesian optimization and LLM baselines by significant margins, achieving success rates comparable to inference-time scaling with a ground-truth simulator while being far more cost-efficient.